Altreva Adaptive Modeler™

Version 1.6.0

User’s Guide

Copyright © 2003 - 2020 Jim Witkam. All rights reserved.

Brief Contents:

1.1 Agent-based models and financial markets

1.2 How Adaptive Modeler uses agent-based models

1.3 Adaptive Modeler’s possibilities

1.7 Conventions and terminology

1.8 How to use this User’s Guide?

Lesson 2: Model initialization

Lesson 5: Evaluating forecasting abilities

Lesson 7: Creating your own models

3. How does Adaptive Modeler work?

4.2 Quote file format requirements

5.2 Agent-based Model parameters

5.6 Saving and restoring model configurations

6.1 Controlling model evolution

6.11 Customizing the User Interface

6.13 Computation performance issues

7.1 Evaluating forecasting success

7.2 Using the Trading Simulator

8.4 Recomputable vs. non-recomputable data series

10. Batch processing and automation

10.3 Saving and opening batch settings

10.4 Starting a batch from the command line

I.2 Agent-based Model data series

I.3 Trading System data series

III.1 General introduction to genetic programming

III.2 Genetic programming in Adaptive Modeler

Full Contents:

1.1 Agent-based models and financial markets

1.2 How Adaptive Modeler uses agent-based models

1.3 Adaptive Modeler’s possibilities

1.7 Conventions and terminology

1.7.1 “Securities” and “shares”

1.7.2 Market data (“bar”, “quote”, “close”)

1.8 How to use this User’s Guide?

Lesson 2: Model initialization

Lesson 5: Evaluating forecasting abilities

Lesson 7: Creating your own models

3. How does Adaptive Modeler work?

3.1.4.1 Running multiple model evolutions

3.2.1 Trading Signal Generator

4.1.3 Accepted quote intervals

4.1.4 Quote bar timing convention

4.1.6 Missing or irregular quotes

4.2 Quote file format requirements

4.2.1 Quote files without header

4.2.9 Interpretation of empty fields

4.2.10 Miscellaneous requirements

5.1.1 Quote history file of security

5.1.2 Security name/description

5.1.4 Model evolution start date and time

5.1.5.1 Handling changes in Market Trading Hours

5.1.6 Pause model after creation

5.2 Agent-based Model parameters

5.2.3 Minimum position increment

5.2.5 Broker commission (for agents)

5.3.3 Minimum initial genome depth

5.3.4 Maximum initial genome depth

5.3.5 Preferred minimum number of nodes in crossover operations

5.3.6 Genome creation gene selection

5.3.6.1 Genome Creation and Mutation Tester

5.5.2 Significant Forecast Range

5.5.3 Generate Cash Signal when forecast is outside range

5.5.4 Generate Cash Signal at market close

5.5.7 Enable Trading Simulator

5.5.11 Specify spread and slippage in % or points

5.5.13 Average slippage or price improvement

5.6 Saving and restoring model configurations

6.1 Controlling model evolution

6.3.2 Adding data series to an existing chart

6.3.4 Removing data series from a chart

6.3.5 Scrolling through charts

6.3.9 Adding an autocorrelation indicator

6.3.11 Showing the data overlay

6.3.13 X-axis for time series charts

6.3.13.2 Positioning and meaning of X-Gridlines

6.3.14 X-axis for distribution series charts

6.3.14.3 Positioning and meaning of X-gridlines and labels

6.6.3 Using the Z (color) dimension

6.6.4 Changing the axes ranges

6.6.5 Changing the gridline intervals

6.6.6 Showing the data overlay

6.6.7 Showing correlation and regression

6.6.8 Setting the agent dot size

6.7.1.2 Sub period size (and compounding interval)

6.9.1 Showing an agent’s genome

6.11 Customizing the User Interface

6.11.3 Hiding or closing a window

6.11.8 Creating window instances

6.11.9 Deleting a window instance

6.11.10 Renaming a window instance

6.13 Computation performance issues

7.1 Evaluating forecasting success

7.2 Using the Trading Simulator

8.4 Recomputable vs. non-recomputable data series

9.1.1 Selecting data series to export

9.1.2 Selecting the export file

9.1.3 Export historical values

9.2.1 Adding data series to the selection

9.2.2 Removing data series from the selection

9.2.3 Exporting distribution data series

9.2.5 At what point in the Agent-based Model cycle are values exported?

9.2.6 Date and time values in the export file

10. Batch processing and automation

10.1.4 Number of models per security

10.1.7 Run numbers start value

10.1.8 Run models until end of quote file

10.1.9 Run models for a given number of bars

10.1.10 Export data at end of run

10.1.11 Save models at end of run

10.1.12 Pause models at end of run

10.1.13 Close models at end of run

10.3 Saving and opening batch settings

10.4 Starting a batch from the command line

I.2 Agent-based Model data series

I.2.10.1 Forecasted Price Change

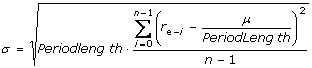

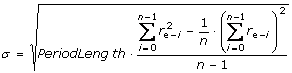

I.2.10.5 Root Mean Squared Error

I.2.10.6 Right / Wrong Forecasted Price Changes

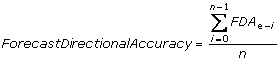

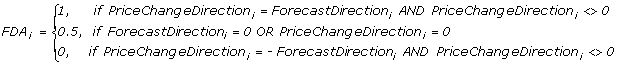

I.2.10.7 Forecast Directional Accuracy

I.2.10.8 Forecast Directional Significance

I.2.10.9 Forecast Directional Area Under Curve (FD AUC)

I.2.12.3.1 Average Agent Wealth

I.2.12.3.2 Rel Stdev Agent Wealth

I.2.12.3.3 Wealth Distribution

I.2.12.6.1 Population Position

I.2.12.6.2 Stdev Agent Position

I.2.12.6.3 Position Distribution

I.2.12.7.1 Breeding fitness return distribution

I.2.12.7.2 Breeding fitness excess return distribution

I.2.12.7.3 Replacement fitness return distribution

I.2.12.7.4 Replacement fitness excess return distribution

I.2.12.8.1 Average Agent Trade Duration

I.2.12.8.2 Rel Stdev Agent Trade Duration

I.2.12.8.3 Trade Duration Distribution

I.2.12.9.1 Average Agent Volatility

I.2.12.9.2 Rel Stdev Agent Volatility

I.2.12.9.3 Volatility Distribution

I.2.12.10.1 Average Agent Beta

I.2.12.11 Generation Distribution

I.2.12.12 Offspring Distribution

I.2.12.20.2 Gene occurring in Genomes

I.2.12.20.4 Gene evaluated in Genomes

I.2.12.21 Average Nodes Crossed

I.2.12.22 Average Nodes Mutated

I.2.13.4 Cumulative excess return

I.2.13.5 Breeding fitness return

I.2.13.6 Breeding fitness excess return

I.2.13.7 Replacement fitness return

I.2.13.8 Replacement fitness excess return

I.3 Trading System data series

I.3.2.15.3 Average trade return

I.3.2.15.5 Average trade duration

I.3.3.3 Monte Carlo Simulation

III.1 General introduction to genetic programming

III.2 Genetic programming in Adaptive Modeler

III.2.2 Input of the trading rules

III.2.3 Output of the trading rules

III.2.5 Function and terminal set

III.2.5.12 IsMon, IsTue, IsWed, IsThu, IsFri

III.2.5.15 open, high, low, close

III.2.5.19 avgvol, minvol, maxvol

III.2.5.21 avgcustom, mincustom, maxcustom

III.2.5.22 >_quote, >_volume, >_custom, >_change

III.2.5.25 change_qt, change_vo, change_cu

III.2.5.44 if_quote, if_lag, if_change, etc.

1. Introduction

Adaptive Modeler is an application for creating agent-based market simulation models for price forecasting of real world market-traded securities such as stocks, ETFs, forex currency pairs, cryptocurrencies, commodities and other markets.

1.1 Agent-based models and financial markets

An agent-based model is a bottom-up simulation of the actions and interactions of multiple individuals and organizations for the purpose of analyzing the (emergent) effects on a system as a whole. An agent-based model of a financial market may consist of a population of agents (representing traders/investors) and a price discovery and clearing mechanism (representing a market).

Agent-based models have shown to be able to explain the behavior of financial markets better than traditional financial models[1]. Conventionally, financial markets have been studied using analytical mathematics and econometric models mostly based on a generalization of supposedly rational market participants (representative rational agent models). However, the empirical features of financial markets can not be fully explained by such models. In reality, market prices are established by a large diversity of boundedly rational (learning) traders with different decision making methods and different characteristics (such as risk preference and time horizon). The complex dynamics of these heterogeneous traders and the resulting price formation require a simulation model consisting of multiple heterogeneous agents and a virtual (artificial) market.

Research has shown that complex behavior as seen in actual markets can emerge from simulations of agents with relatively simple decision rules. Commonly observed empirical features of financial time series (so called “stylized facts” such as fat tails in return distributions and volatility clustering) that have confronted the Efficient Market Hypothesis, have successfully been reproduced in agent-based models[2].

1.2 How Adaptive Modeler uses agent-based models

Adaptive Modeler creates an agent-based market model for a user selected real world security. The model consists of a population of thousands of trader agents, each with their own trading rule, and a virtual market. Adaptive Modeler then evolves this model step by step while feeding it with market prices of the security and (optionally) other variables.

After every received market price, the agents evaluate their trading rule and place buy or sell orders on the Virtual Market where a clearing price is determined and orders are executed. Agents and their trading rules evolve through adaptive genetic programming. Agents with poor performance are being replaced by new agents with a new trading rule that is created by recombining and mutating trading rules of well performing agents.

Self-organization through the evolution of agents and the resulting price dynamics drives the model to learn to recognize and anticipate recurring price patterns while adapting to changing market behavior. Model evolution never ends. When all historical market prices have been processed, the model waits for new price data to become available and then evolves further. The model thus evolves in parallel with the real world market. After every processed price the model generates a forecast for the next bar (or tick) based on the behavior of the Virtual Market. Trading signals are generated based on the forecasts and the user’s trading preferences.

1.3 Adaptive Modeler’s possibilities

Models generate trading signals for a single selected security. Trading signals are based on one-bar-ahead (or one-tick-ahead) price forecasts that are calculated after every received quote bar (or tick). Adaptive Modeler supports quote intervals ranging from 1 millisecond to multiple days as well as variable intervals. The only limiting factors are the available market data and processing speed.

Adaptive Modeler uses evolutionary computation and learns over time. Therefore it is recommended to provide the model with some historical market data. Using at least 1,000 historical quotes is recommended. The more historical data is used, the more opportunity the model has to learn and the more information the user gets on how a model performs during different periods and market regimes. Also, only after a sufficient number of quotes has been processed, statistical significance can be attributed to any demonstrated forecasting accuracy.

Adaptive Modeler is primarily designed for active trading of stocks or stock indices (i.e. using futures or ETFs) with sufficient volatility and small spreads. Because signals tend to switch frequently, Adaptive Modeler is suitable for day trading or swing trading strategies amongst others. Other markets such as forex currency pairs, commodities, Bitcoin or other cryptocurrencies can also be used because in principle Adaptive Modeler can process any sort of time series. In general, the volatility on the used quote interval must be high enough to cover transaction costs (broker commissions, spread and slippage). Otherwise, (simulated) trading performance will be poor even with high forecasting accuracy. For example, it will be more difficult to achieve good performance with a 1-minute interval than with a daily interval because the 1-minute price changes may be too small to cover transaction costs. This means that the break-even forecast accuracy rate for a 1-minute interval is higher than for a daily interval.

Adaptive Modeler provides an extensive set of output data and visualization tools to observe the historical evolution of a model, its current behavior and the accuracy and significance of previous forecasts and trading signals. Also it is possible to simulate trading and to project the likely range of returns under given assumptions. Extensive performance analysis is available to study risk and return measures of simulated trading. However, as for any system that aims to make predictions about the future, there is no guarantee that any demonstrated forecasting success or trading performance will remain the same in the future. Past performance or results implied, shown or otherwise demonstrated by Adaptive Modeler can not guarantee future performance or results. The user should be aware of this and consider the risks and potential rewards of every investment or trading decision on its own merits.

Trading can be simulated according to user customizable trading parameters such as enabling/disabling short positions, close positions at the end of the day, expected spread and slippage, and others.

Adaptive Modeler does not contain built-in support for online market data feeds nor does it contain interfaces for automatic order placement with online brokers. Market data is imported from CSV files using a flexible and intelligent file reader and output data such as forecasts and trading signals can be exported to CSV files for further processing by other applications. Traders are encouraged to supplement Adaptive Modeler’s signals with their own order placement algorithms for actual automated trading.

1.4 Specifications

Some specifications and limitations of the different editions of Adaptive Modeler[3]:

|

Feature |

Evaluation Edition |

Standard Edition |

Professional Edition |

|

Forecasts and trading signals |

delayed[4] |

real-time |

real-time |

|

Maximum number of agents |

5,000 |

25,000 |

250,000 |

|

Maximum genome size |

1,024 |

4,096 |

4,096 |

|

Maximum genome depth |

20 |

20 |

unlimited[5] |

|

Maximum bars |

20,000 |

20,000 |

unlimited[6] |

|

Maximum bars stored[7] |

20,000 |

20,000 |

100,000 |

|

Manual Export (max bars) |

500 |

20,000 |

100,000 |

|

Auto Export |

No |

No |

Yes |

|

Batch Mode (max models) |

4[8] |

unlimited[9] |

unlimited[10] |

|

Custom input variables |

- |

- |

100 |

|

Configurable crossover |

No |

No |

Yes |

1.5 System requirements

The

system requirements for running Adaptive Modeler are:

- Windows 10, 8.1, 7 SP1, or Windows Server 2008 R2 SP1 or higher versions

- Microsoft .NET Framework 4.8 Runtime (already

installed on most systems)

- 2GB RAM (supports up to 25,000 agents;

for 250,000 agents 4GB is required)

Other requirements:

- (historical) market data of the security to be modeled

Recommended:

- market data feeds, downloaders and/or conversion tools that retrieve market

data and export it to Comma Separated Values (CSV) files

- fast CPU

Note: Running Adaptive Modeler on a Virtual Machine is not supported.

1.6 Installation

Installing Adaptive Modeler

1.

Uninstall any previous installation of Adaptive Modeler first.

2. Download Adaptive Modeler from the download page of the Altreva

website (Evaluation Edition) or from the personal download link provided by

Altreva Support (Standard and Professional Edition). In either case, select

“Save”.

3. Unzip the downloaded

file, run the installer file and follow the instructions on

screen.

Upgrading from previous versions

Users of the Evaluation Edition can download the latest version from the download page. Users of the Standard or Professional Edition should contact Support for upgrading instructions.

Please take note of the following before installing a new version of Adaptive Modeler:

- See

the latest Release

Notes

These may explain changes relevant to users of previous versions that are not evident from the user interface and may contain other important information. - Uninstall

previous installations

Previous installations of Adaptive Modeler must first be uninstalled. Also the Evaluation Edition must first be uninstalled before installing the Professional or Standard Edition.

1.7 Conventions and terminology

1.7.1 “Securities” and “shares”

The term “security” is used throughout Adaptive Modeler for the financial instrument or market that is being forecasted which can be a stock, ETF, forex pair, cryptocurrency, commodity, bond, future or something else. The term “shares” is used for security units and depending on the security type could refer to stock shares, currency units, contracts, etc.

1.7.2 Market data (“bar”, “quote”, “close”)

Adaptive Modeler accepts either OHLC (open/high/low/close) bars or tick data. In either case the term “bar” or “quote” is often used to indicate a set of prices (i.e. open/high/low/close or bid/ask/close) that form one row in a quote file. The term “bar” is also used to indicate the time interval of a single quote bar (the quote interval) or the processing step (and model evolution) of a single bar. The term “close” is often used to indicate the close price in case of OHLC bars or the trade price in case of tick data.

1.7.3 Currency

Adaptive Modeler does not use a currency symbol for money amounts. The currency of amounts such as start capital, costs, prices, etc. does not need to be specified and can thus be interpreted as the base currency of your choice.

All money amounts in Adaptive Modeler are considered to be of the same currency (except of course price related data when modeling foreign exchange prices and the quote currency of the currency pair is not the user’s base currency).

To model a non-forex security that is denominated in another currency than the user’s base currency, the security prices should be converted to the base currency before importing quotes, otherwise changes in the exchange rate would not be accounted for.

1.7.4 Dates

By default, Adaptive Modeler uses the US date convention (mm/dd/yy) for showing dates on screen and for exporting data to files. This date format can be changed to the European date convention (dd/mm/yy) through “Options…” from the “Tools” menu. Be aware that this date format has no effect on importing quotes. The date format used in the quote file (see Supported quote file formats) can be different than the date convention used for showing dates on screen and for exporting.

1.8 How to use this User’s Guide?

A Getting Started Tutorial is provided in chapter 2 for learning the basics of Adaptive Modeler. Chapter 3 describes the inner workings of Adaptive Modeler in more depth. Chapter 4 explains how to import market data in Adaptive Modeler and the supported file formats. Chapter 5 describes in detail how to configure a model and chapter 6 describes the various elements of Adaptive Modeler’s user interface. Some issues about trading are discussed in chapter 7. Chapters 8 to 10 deal with some advanced features such as exporting data and batches. Additional information is provided in appendices.

1.9 Getting help

Adaptive Modeler includes a help system that provides context-sensitive help in several parts of the application. When a help button (“?”) is shown in the top-right corner of a dialog box, context-sensitive help is available. By clicking the help button, a question mark will appear next to the mouse cursor. By clicking with the question mark on the desired element in the dialog box, the relevant section of the User’s Guide will be shown in a separate Help window. Alternatively, F1 can be pressed to get help for the active text box or control.

Context-sensitive help is available for:

- dialog boxes with a “?” button in the top-right corner

- the data series tree view (press Shift-F1 on the selected data series name)

- “Edit Data Series Parameters” dialog boxes (the Help button will give help about the data series)

- the Select Genes dialog box (press F1 on the selected gene or type cell)

In other parts of the application, the User’s Guide can be launched by clicking the help (“?”) button in the toolbar or by pressing F1.

Note: By pressing CTRL-F in the Help window, a search box will appear.

1.10 Examples

The examples that are presented in the Startup window demonstrate some of the possibilities of Adaptive Modeler. Besides the short mouse-over descriptions given in the Startup window, they are not explained in detail and may not be self-explanatory to new users. To start learning how to use Adaptive Modeler, it is recommended to follow the Getting Started Tutorial.

Advanced users can use the Styles and/or Configuration files that are used by the examples for their own models. They can be found in the Styles and Configuration folders. (The examples themselves are implemented as batch files).

2. Getting Started Tutorial

This tutorial covers Adaptive Modeler’s basic concepts and features. It shows how to create and configure a new model, how to follow its evolution with various charts and indicators, and how to evaluate forecasting abilities and trading performance. It also explains several user interface features. Advanced features outside the scope of this tutorial are explained elsewhere in the User’s Guide. Before starting this tutorial, it is recommended to read Chapter 1 of the User’s Guide.

This tutorial uses the S&P500 index as an example. It has been chosen for its stock market index leadership position, its long record of historical quotes and the existence of both a deep futures market and a closely correlated and highly liquid ETF (SPY). A quote file containing the historical daily quotes of the S&P500 cash index since 1950 is included[11].

Note: The screen shots show what you need to do, not the results. In some cases your screen may look slightly different than the screen shots due to version differences.

Lesson 1: Model configuration

To create a new model in Adaptive Modeler:

![]() Start Adaptive Modeler

Start Adaptive Modeler

![]() Click “New” in the Startup window

Click “New” in the Startup window

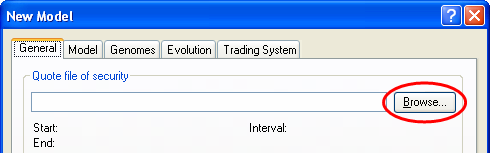

The “Model Configuration” dialog box will open, showing the “General” tab. The first thing to do is to select the quote file of the security that we are going to model. In this case we will use the included S&P500 index quote file.

![]() At “Quote file of security”, click

the “Browse…” button

At “Quote file of security”, click

the “Browse…” button

![]() In the “Browse” dialog box, open

“S&P500 (Day).csv” (in the Samples folder)

In the “Browse” dialog box, open

“S&P500 (Day).csv” (in the Samples folder)

The quote file will now be preprocessed to automatically detect the quote interval and some other settings. Note that the “Model evolution start date/time” is automatically set to a date shortly after the start of the quote file.

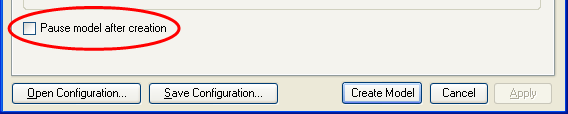

![]() Near the bottom of the dialog box,

check “Pause model after creation”

Near the bottom of the dialog box,

check “Pause model after creation”

This will allow us to observe the model’s initial state before starting model evolution.

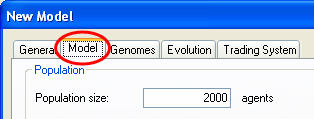

![]() Select the “Model” tab

Select the “Model” tab

The “Model” tab contains various parameters. We will leave these at their default values. Notice that the population will contain 2000 agents. We will also leave the tabs “Genomes” and “Evolution” unchanged.

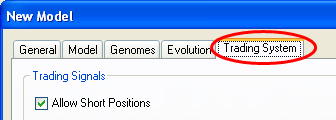

![]() Select the “Trading System” tab

Select the “Trading System” tab

In the top-left you will see that “Allow short positions” is checked. This means that long, short and cash positions can be advised. In the “Trading Simulator” area, note that “Auto start at bar:” is checked with the value “2500”. This means that the built-in trading simulator will automatically start trading after 2500 quote bars have been processed. (The first 2500 bars can be considered a “training” period in this case). We will leave these settings as they are.

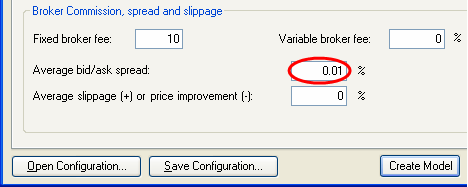

In the “Broker commission, spread and slippage” area we need to enter realistic transaction costs for the Trading Simulator. For SPY the spread is usually 1 cent which is about 0.01% of its value.

![]() At “Average bid/ask spread %”,

enter “0.01”

At “Average bid/ask spread %”,

enter “0.01”

We will leave the other values unchanged. This concludes our model’s configuration.

Lesson 2: Model initialization

You are about to create and initialize the model:

![]() Click the “Create Model” button of

the “Model Configuration” dialog box

Click the “Create Model” button of

the “Model Configuration” dialog box

After clicking “Create Model” the model will be created and initialized. In this case, a population of 2000 agents is created and each agent is given a random trading rule, a starting capital of 100,000 in cash and no shares. (Shares in this case are fictitious “S&P500 index” shares). Model initialization takes only a short time and is only visualized by a progress bar.

When model initialization is complete, the following windows have appeared:

· Data Series: an expandable tree view with all of Adaptive Modeler’s data series (more about this later)

· Current Values: some values at the current point in model evolution (such as the number of bars that have been processed which is still zero now)

· Trading Signals: a list of recent trading signals (still empty now)

· Charts: several charts such as the S&P500 index price (most charts are still empty now)

This is the default “style” of Adaptive Modeler. A style is a workspace layout that includes various presentation settings and preferences. Now however, we are going to use another style that was specifically designed for this tutorial:

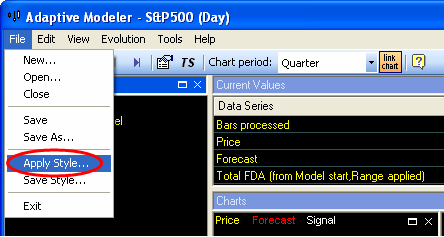

![]() From the “File” menu, choose

“Apply Style…”

From the “File” menu, choose

“Apply Style…”

![]() In the “Open” dialog box, open

“Tutorial.aps” (in the Styles folder)

In the “Open” dialog box, open

“Tutorial.aps” (in the Styles folder)

The workspace has now been changed. The Charts window is now called “Model” and contains some other charts.

Tip: You can disable the Tool Tips that keep popping up when you move the mouse across the screen by going to the “Tools” menu, select “Options…” and uncheck “Show User Interface Tool Tips”.

Let’s take a brief look at the initial state of the model before starting model evolution.

As said, all agents own 100,000 in cash and 0 shares. This is visible in the “Wealth Distribution” histogram chart in the top-left of the Model window. This histogram is now showing a single bar representing 2000 agents that all have a wealth of 100,000.

Because all agents have 0 shares at this point, their “position” is 0%. This is shown in the “Position Distribution” histogram chart (top-center). The position of an agent is the value of the shares it owns as a percentage of its wealth. A negative value indicates a short position.

The top-right chart is a “Genome Size Distribution” histogram. Genomes are the trading rules of agents. The chart already shows that the trading rules have different sizes. The size is measured in the number of “genes” that the genome consists of. Genes are the elementary functions and values that trading rules are constructed of. The average genome size is shown in yellow on the X-axis. Later we will look at individual agents and trading rules in more detail.

Since you will be using this model throughout the rest of this tutorial, it is a good idea to save it now in case you need to revert to this point later.

![]() Select “Save” from the “File” menu

and save the model at a location of your choice

Select “Save” from the “File” menu

and save the model at a location of your choice

Now that we are familiar with the initial state of the model, we are ready to start model evolution.

Lesson 3: Model evolution

The model is ready to start its evolution. During evolution the model will process the historical quotes in the quote file as live streaming data. Every quote bar is processed only once and in chronological order. (Model evolution doesn’t end when the quote file ends or when present day is reached. It continues when new quotes are added to the quote file and there is no difference between the way historical and new quotes are being processed).

We will first go through model evolution step by step, feeding it one quote bar at a time:

![]() In the toolbar, click the “step”

In the toolbar, click the “step” ![]() button (or press F4)

button (or press F4)

The model now evolves one step, starting at the “Model evolution start date/time” as set on the “General” tab of the model configuration (recall from Lesson 1). At every step, one quote bar is read from the quote file[12] and all agents evaluate their trading rule and place a buy or sell order on the Virtual Market. The Virtual Market (“VM”) calculates the clearing price and executes all executable orders. The clearing price (“VM Price”) is then taken as the forecast for the close of the next bar and a new trading signal is given if necessary. This process is repeated for every imported quote bar. A step usually takes only a fraction of a second.

Let’s see what exactly has changed after this first step. The first values have appeared in the charts “Buy Orders”, “Sell Orders”, “VM Trades”, “VM Volume” and “VM Price”. These reflect the numbers of buy and sell orders that have been placed, the resulting trades and the clearing price. More detailed market information such as market depth is also available but to keep things simple this is not covered in this tutorial.

The “Wealth Distribution” and “Position Distribution” histograms now show different wealth and position values for agents as a result of changes in their portfolios.

The forecast (which equals the VM Price) is shown in the Current Values window and also drawn in the bottom-right chart (in red). This chart also shows the security’s price in yellow (the S&P500 index in this case). A trading signal has been generated depending on whether the forecast is above or below the last security price and is shown in the Trading Signals window. The trading signal is also drawn in the bottom-right chart (in white) as an up or down arrow for “Long” or “Short” signals or a small circle for “Cash” signals[13].

Note: Depending on available space, the security price is shown in the chart as close lines or as OHLC bars.

Tip: You can maximize a chart or entire window to get a better view by

clicking the “maximize” ![]() button near its

top-right corner. Maximized charts or windows can be de-maximized with the

“de-maximize”

button near its

top-right corner. Maximized charts or windows can be de-maximized with the

“de-maximize” ![]() button. Depending on

your screen size, you may want to maximize a chart or window at some points

during this tutorial.

button. Depending on

your screen size, you may want to maximize a chart or window at some points

during this tutorial.

![]() Press F4 (or click the “step”

Press F4 (or click the “step” ![]() button) some more times while observing the charts

button) some more times while observing the charts

You will see the Virtual Market activity unfold further as new orders are placed by agents. The number of buy and sell orders and trades varies per bar. At every step a forecast for the next bar’s closing price is made. New trading signals will be given when necessary. Because Adaptive Modeler makes a bar-ahead forecast every bar (attempting to predict the direction of bar-to-bar price changes) new signals are sometimes given almost every bar.

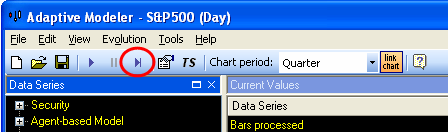

Model

evolution can also proceed continuously. Use the “resume” ![]() and

“pause”

and

“pause” ![]() buttons to resume/pause

model evolution. You can also use the F3 key to resume/pause model evolution.

buttons to resume/pause

model evolution. You can also use the F3 key to resume/pause model evolution.

![]() Press F3 a couple of times to

resume and pause model evolution

Press F3 a couple of times to

resume and pause model evolution

We will now let the model evolve until about 1000 bars. You can see how many bars have been processed in the Current Values window. Use pause/resume (F3) or step (F4) at will. Take your time and observe the charts.

![]() Evolve the model until about 1000

bars have been processed and then pause it again

Evolve the model until about 1000

bars have been processed and then pause it again

Note: Especially during the early (“learning”) stages of model evolution, forecasts sometimes strongly diverge from the security price. Later the forecasts usually tend to stay closer to the security price. Therefore, the Trading Simulator is usually started after a learning period of a number of bars. Adaptive Modeler also offers ways to filter out strongly diverging forecasts (see Significant Forecast Range).

You may have noticed that after 80 bars the “Genome Size Distribution” also started to change. This is caused by the replacement of agents and their trading rules by new agents through breeding. We will talk more about this later.

Also note that the “Population Position” chart (bottom-center) shows the course of the average position of all agents over time.

Note: In case you failed to pause the model around 1000 bars and the model has already evolved much further, you can revert to the model saved at the end of Lesson 2 and try again in order to keep the model in sync with this tutorial. Saving the model at the end of every lesson is recommended for reverting when needed.

Controlling Charts

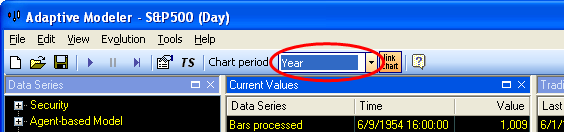

Because older information disappears from the left side of charts during evolution, the charts are now only showing the last quarter of history. This is indicated in the “Chart period” dropdown box in the main toolbar which shows “Quarter”. You can change the chart period to view longer periods:

![]() In the main toolbar, click on the “Chart

period” dropdown box and select “Year”

In the main toolbar, click on the “Chart

period” dropdown box and select “Year”

All the charts (except the distribution histograms) will now show one year of history.

![]() In “Chart period” dropdown box,

select “5 years”

In “Chart period” dropdown box,

select “5 years”

Now all the model’s evolution history so far should be visible.

![]() In “Chart period” dropdown box,

select “Quarter” again

In “Chart period” dropdown box,

select “Quarter” again

To look back at older information without zooming out and losing details, you can simply scroll the charts horizontally:

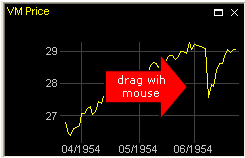

![]() On the “VM Price” chart’s plot

area, click and hold down the left mouse button and drag the chart to the right

to see its history

On the “VM Price” chart’s plot

area, click and hold down the left mouse button and drag the chart to the right

to see its history

When you do this, all the charts will scroll in sync (except the distribution histograms). When you are done viewing the history:

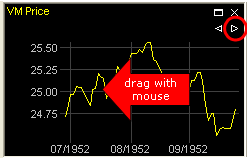

![]() Drag the chart back to its most

recent value again (or click the > button near the top-right corner to jump

there directly)

Drag the chart back to its most

recent value again (or click the > button near the top-right corner to jump

there directly)

Note: A chart must be showing its most recent value in order to get automatically updated during model evolution. (When the most recent value is shown, the < and > buttons are not shown). To learn more about using charts, see Charts.

Lesson 4: Agents

In an agent-based model the most interesting phenomena are generally seen on the population level rather than in individual agents. The purpose of an agent-based model is to study emergent behavior caused by the interaction of many (relatively simple) agents. Likewise, we are more interested in the forecasts (Virtual Market prices) that result from our model than in the life and times of individual agents.

Nevertheless, it is important to know what agents are and how they operate and interact to understand how they influence their environment (the market) and the forecasts. In this lesson we will therefore first look at the details of individual agents before we look at the population as a whole again.

Viewing agent details

To get detailed information of an agent:

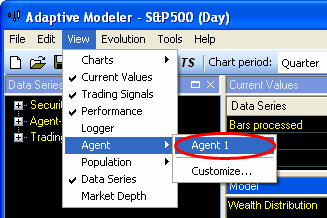

![]() From the “View” menu, choose

“Agent” and then “Agent 1”

From the “View” menu, choose

“Agent” and then “Agent 1”

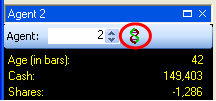

A window titled “Agent 1” has now appeared (bottom-left) showing the following information of agent number 1:

· Age: number of bars processed since agent creation

· Initial wealth: the agent’s wealth at the start of its creation

· Cash: amount of cash owned by agent

· Shares: number of shares owned by agent (negative for short position)

· Wealth: total wealth of an agent (value of cash and shares)

· Position: value of shares as a percentage of wealth (negative for short position)

· Cumulative (excess) return: return since agent creation (excess of security’s return)

· Breeding fitness (excess) return: a short term trailing (excess) return measure (selection criterion for breeding)

· Replacement fitness (excess) return: average (excess) return per bar since agent creation (selection criterion for replacement)

· Transactions: number of transactions an agent has done during its lifetime

· Trade duration: average number of bars between transactions (indicator of an agent’s investment/trading horizon)

· Volatility: volatility of agent wealth (indicator of absolute risk of an agent’s investment/trading style)

· Beta: correlation of agent wealth with security price (indicator of relative risk of an agent’s investment/trading style)

· Generation: genealogical generation number of agent

· Offspring agents: number of offspring agents that this agent has produced

· Genome size: size of genome (trading rule) in number of genes

· Genome depth: number of hierarchical levels in genome

![]() Evolve the model until about 1300

bars (use F3 and/or F4) and watch the agent values change

Evolve the model until about 1300

bars (use F3 and/or F4) and watch the agent values change

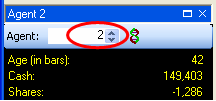

You may notice that Age is sometimes reset to 1. This means that the agent has been replaced by a new agent in the breeding process. In fact, the Agent window shows “slots” in the population. Also note that some agent values only become available when the agent has reached a certain age.

![]() Look at some other agents by

changing the agent (slot) number in the Agent window’s toolbar (by typing or by

using the up and down buttons or keys)

Look at some other agents by

changing the agent (slot) number in the Agent window’s toolbar (by typing or by

using the up and down buttons or keys)

Agent charts

Most agent values can also be followed in charts:

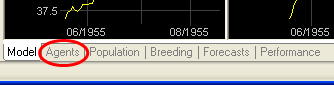

![]() Click on the “Agents” tab (below

the charts)

Click on the “Agents” tab (below

the charts)

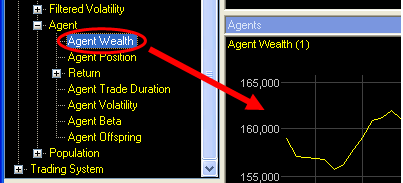

In this window, a chart with the wealth history of agent 1 is already showing. We will add some more charts:

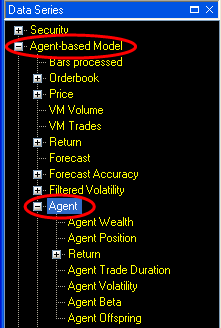

![]() In the Data Series window on the

left, expand the “Agent-based Model” category

In the Data Series window on the

left, expand the “Agent-based Model” category

![]() Expand the “Agent” subcategory

Expand the “Agent” subcategory

In the “Agent” subcategory you will see various agent data series such as “Agent Wealth” and “Agent Position”. To watch the trading activity of an agent:

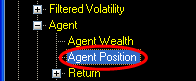

![]() In the Data Series window,

double-click on the “Agent Position” data series name

In the Data Series window,

double-click on the “Agent Position” data series name

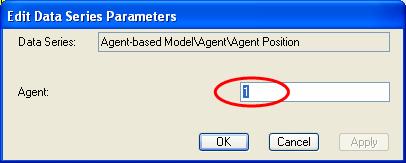

A dialog box “Edit Data Series Parameters” will appear where you can enter the agent number to show:

![]() Enter “1” in the text box and

press ENTER (or click “OK”)

Enter “1” in the text box and

press ENTER (or click “OK”)

A new chart showing the position history of agent 1 has now been added to the Agents window. It doesn’t contain any history yet because this information is not stored for all agents. During model evolution new information will appear in this chart. With this chart it is easy to see the transactions of an agent from the changes in its position.

You can also show information of multiple agents in one chart:

![]() From the Data Series window, drag

and drop the “Agent Wealth” data series name into the chart “Agent Wealth (1)”

From the Data Series window, drag

and drop the “Agent Wealth” data series name into the chart “Agent Wealth (1)”

![]() Enter “2” in the “Edit Data Series

Parameters” and press ENTER

Enter “2” in the “Edit Data Series

Parameters” and press ENTER

The wealth of agent 1 and agent 2 is now shown together in one chart.

![]() From the Data Series window, drag

and drop “Agent Position” into the “Agent Position (1)” chart, enter “2” in the

dialog box and press ENTER

From the Data Series window, drag

and drop “Agent Position” into the “Agent Position (1)” chart, enter “2” in the

dialog box and press ENTER

The position history of agent 1 and agent 2 are now shown together in one chart.

![]() Evolve the model until about 2000

bars and watch the agent charts

Evolve the model until about 2000

bars and watch the agent charts

You may notice dots appearing in the agent charts occasionally. This indicates agent replacement.

Tip: You can show any data series from the Data Series window in a chart in the ways shown above. Up to eight data series can be combined in one chart.

Trading rules

From the Agent window, you can also view an agent’s trading rule.

![]() In the Agent window’s toolbar,

click the “Show genome”

In the Agent window’s toolbar,

click the “Show genome” ![]() button

button

A window will appear showing the agent’s trading rule. Explaining the trading rules is outside the scope of this tutorial. To learn more, see Showing an agent’s genome or Trading Rules.

![]() When you are done viewing the

trading rule, close the trading rule window

When you are done viewing the

trading rule, close the trading rule window

![]() Close the Agent window by clicking

the x button in its top-right corner

Close the Agent window by clicking

the x button in its top-right corner

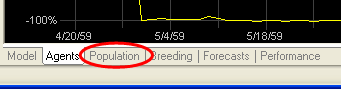

Viewing the population

You are already familiar with the wealth and position distribution histogram charts. These charts give only a glimpse of the diversity of agents. A more extensive visualization of the agent population is provided by the Population window where multiple agent values can be plotted against each other.

![]() Click on the “Population” tab

(below the charts)

Click on the “Population” tab

(below the charts)

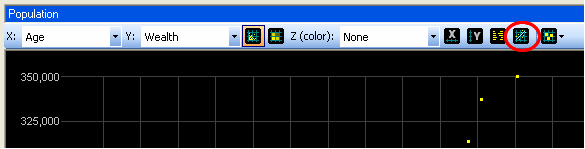

The Population window is now showing and contains a scatter plot of agent Wealth against agent Age. As is shown in the toolbar, Age is shown on the X-axis and Wealth on the Y-axis. Every dot represents one agent.

![]() In the Population window’s

toolbar, click the “Show Correlation and Regression”

In the Population window’s

toolbar, click the “Show Correlation and Regression” ![]() button

button

Correlation and regression information will appear and a regression line is drawn. In general, there will be a positive correlation between Age and Wealth because evolutionary selection pressure will let successful agents stay in the population longer than unsuccessful ones.

![]() In the Population window’s

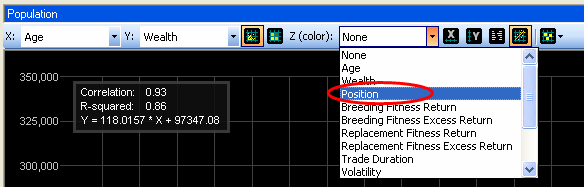

toolbar, click on the “Z (color):” dropdown box and select “Position”

In the Population window’s

toolbar, click on the “Z (color):” dropdown box and select “Position”

The color of the agents now indicates their position; blue for long positions and red for short positions (see the legend above the plot area). During model evolution you will notice agents changing color. Some agents are holding a long position most of the time, others a short position and others frequently switch positions.

![]() Evolve the model until about 2500

bars while watching the Population window

Evolve the model until about 2500

bars while watching the Population window

Let’s look at some other agent values:

![]() In the Population window’s

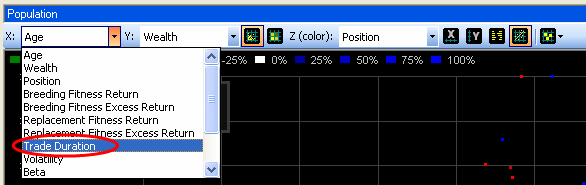

toolbar, click on the “X:” dropdown box and select “Trade Duration”

In the Population window’s

toolbar, click on the “X:” dropdown box and select “Trade Duration”

Trade Duration is the average number of bars between successive transactions of an agent.

![]() Click the “Show Correlation and

Regression”

Click the “Show Correlation and

Regression” ![]() button again to remove

correlation and regression information

button again to remove

correlation and regression information

![]()

Agent Wealth is now plotted against Trade Duration. Agents with low trade duration (“active traders”) are on the left and those with high trade duration (“long term investors”) are on the right.

![]() Evolve the model until about 3000

bars and watch the Population window

Evolve the model until about 3000

bars and watch the Population window

You may notice that some agents are moving to the right as their trade duration increases over time (“long term investors”) and others are staying on the left while frequently changing their Position as is visualized by color changes (“active traders”).

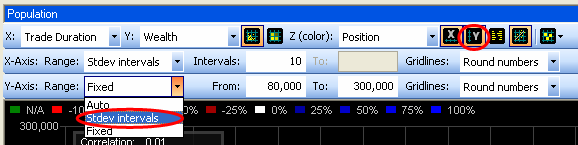

Hundreds of different scatter plots can be made from all the possible combinations of agent values. A quick way to see some of them is to browse through the “X:”, “Y:” or “Z:” dropdown boxes. To ensure that the most relevant region of the plot is automatically shown for every combination, the axes ranges can automatically be set to a number of standard deviations around the mean value:

![]() In the Population window’s

toolbar, click the “X-Axis settings”

In the Population window’s

toolbar, click the “X-Axis settings” ![]() button

button

![]() In the X-axis toolbar that has

just appeared, click on the “Range:” dropdown box and select “stdev intervals”

In the X-axis toolbar that has

just appeared, click on the “Range:” dropdown box and select “stdev intervals”

![]() In the Population window’s

toolbar, click the “Y-Axis settings”

In the Population window’s

toolbar, click the “Y-Axis settings” ![]() button

button

![]() In the Y-axis toolbar, click on the

“Range:” dropdown box and select “stdev intervals” as well

In the Y-axis toolbar, click on the

“Range:” dropdown box and select “stdev intervals” as well

![]() In the Population window’s

toolbar, click the “X-Axis settings”

In the Population window’s

toolbar, click the “X-Axis settings” ![]() and “Y-Axis settings”

and “Y-Axis settings” ![]() buttons once more to

hide the axis toolbars

buttons once more to

hide the axis toolbars

![]() Now double-click on any of the

“X:”, “Y:” or “Z:” dropdown boxes and use the mouse wheel (or UP and DOWN

arrow keys) to browse through different plots

Now double-click on any of the

“X:”, “Y:” or “Z:” dropdown boxes and use the mouse wheel (or UP and DOWN

arrow keys) to browse through different plots

![]() Evolve the model until about 6000

bars while browsing through different plots at will

Evolve the model until about 6000

bars while browsing through different plots at will

Tip: An interesting plot is “Offspring” for X, “Genome size” for Y and “Beta” for Z. The horizontal position of agents now indicates the total number of their offspring agents (which in general correlates strongly with long term return). When an agent moves to the right, it is creating offspring agents and must therefore be among the agents with highest Breeding Fitness Return (which is a short term return indicator). The Genome size itself is not of major interest here but is used to distribute agents vertically over the chart for clarity. Because the genome size doesn’t change during an agent’s life, the vertical position stays fixed which makes it easy to follow individual agents.

Breeding

Breeding is the process of creating new offspring agents from some of the best performing agents to replace some of the worst performing agents. At every bar, agents with the highest “Breeding Fitness Return” are selected as parents. Copies of the genomes (trading rules) of pairs of these parents are then recombined through genetic crossover to create new genomes that are given to new offspring agents. These new agents replace agents with the lowest “Replacement Fitness Return”.

The breeding frequency and the number of agents to be replaced can be controlled by parameters to influence the adaptability versus the stability of the model. Changing these parameters is outside the scope of this tutorial but we will look at how some of the consequences of the breeding process can be observed.

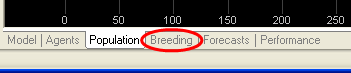

![]() Click on the “Breeding” tab (below

the Population window)

Click on the “Breeding” tab (below

the Population window)

![]() In the main toolbar, set the

“Chart period” to “25 Years”

In the main toolbar, set the

“Chart period” to “25 Years”

The “Creations” chart (top-left) shows the number of agents created per bar. After some heavy initial fluctuations this value stabilizes to 20 agents per bar (1% of the population). (The initial fluctuations are because the number of agents that can be created/replaced depends on agent age). To keep the population size fixed, the number of replaced agents is always equal to the number of new created agents.

The “Avg Agent Age” chart shows the average age of all agents. This gives an indication of the level of experience present in the population. Sudden drops may indicate a sudden change in the market that resulted in a wipe-out of experienced agents. The “Age distribution” histogram shows what age groups currently exist in the population.

The “Avg Genome Size” shows the average size of the genomes over time. Also recall the Genome Size Distribution histogram on the Model tab. Through the process of recombining and mutating genomes for the creation of new agents, the average genome size changes. Bigger genomes may contain more complex algorithms and may be able to look back further in history but do not always necessarily perform better.

![]() Evolve the model until the end of

the quote file while observing the Model, Agents, Population or Breeding window

at will (at any Chart period)

Evolve the model until the end of

the quote file while observing the Model, Agents, Population or Breeding window

at will (at any Chart period)

Lesson 5: Evaluating forecasting abilities

So far we have looked inside the model extensively but we have not looked at how well it is doing what it is supposed to do, forecasting prices. To evaluate the forecasting abilities of a model, a number of indicators are available.

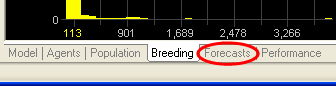

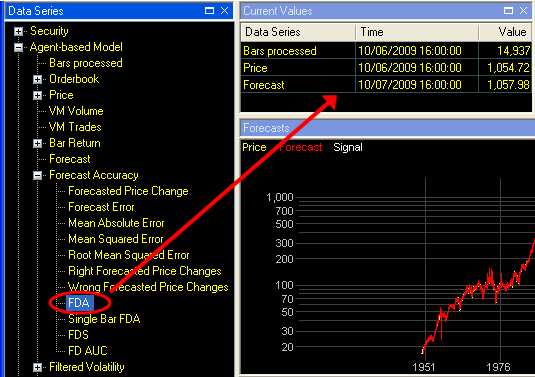

![]() Click on the “Forecasts” tab

(below the main window)

Click on the “Forecasts” tab

(below the main window)

![]() In the main toolbar, set the

“Chart period” to “Quarter”

In the main toolbar, set the

“Chart period” to “Quarter”

A simple but intuitive way to observe past forecasts is provided by the “Right Forecasted Price Changes” (blue) and “Wrong Forecasted Price Changes” (red) in the top-center chart. These series show the security price using blue for price changes whose direction was forecasted right and red for those that were forecasted wrong. This visualizes how many price changes were forecasted right and wrong as well as their magnitude.

A more quantitative way to evaluate forecasts is provided by the “FDA” chart (top-right). FDA stands for “Forecast Directional Accuracy”. This indicator shows the percentage of bars for which the forecasted bar-to-bar price change was in the right direction. Values above 50% indicate that more often than not the direction of price change was forecasted correctly. The chart shows a 100 day trailing FDA during the last quarter.

To see how the FDA evolved during the entire model history:

![]() In the main toolbar, set the

“Chart period” to “100 Years”

In the main toolbar, set the

“Chart period” to “100 Years”

On such long time scales you may want to calculate a trailing FDA over more bars:

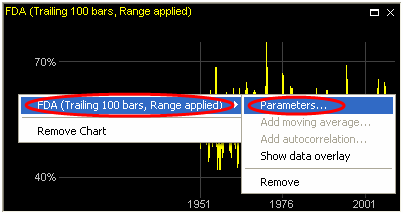

![]() Right-click on the “FDA” chart

Right-click on the “FDA” chart

![]() In the context menu, open the data

series submenu and click “Parameters…”

In the context menu, open the data

series submenu and click “Parameters…”

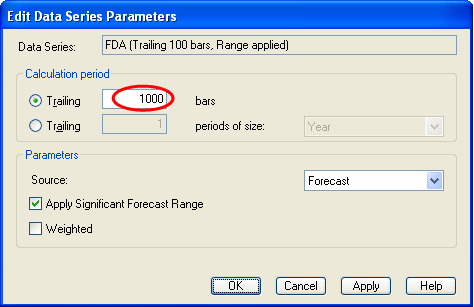

![]() In the dialog box, enter “1000” at

“Trailing … bars” and click “OK”

In the dialog box, enter “1000” at

“Trailing … bars” and click “OK”

The chart now shows a 1000 day trailing FDA. You may notice that FDA varies over time. To get the average FDA over the entire model history:

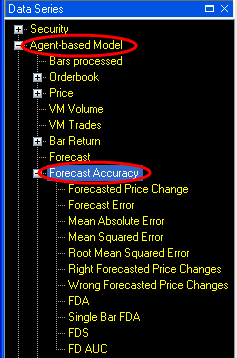

![]() In the Data Series window, expand

the “Agent-based Model” category and expand the “Forecast Accuracy” subcategory

In the Data Series window, expand

the “Agent-based Model” category and expand the “Forecast Accuracy” subcategory

![]() In the “Forecast Accuracy”

subcategory, locate the “FDA” data series and drag and drop it into the Current

Values window

In the “Forecast Accuracy”

subcategory, locate the “FDA” data series and drag and drop it into the Current

Values window

![]() In the “Edit Data Series

Parameters” dialog box, select “Since model start” and click “OK”

In the “Edit Data Series

Parameters” dialog box, select “Since model start” and click “OK”

The FDA since model start is now shown in the Current Values window.

Tip: You can drag any data series from the Data Series window into the Current Values window.

For a more complete evaluation of forecasting abilities, other indicators in the “Forecast Accuracy” subcategory of the Data Series window should be observed as well. This is outside the scope of this tutorial.

Tip: To learn more about any data series in the Data Series window, click on (or select) the data series name and press Shift-F1.

To conclude whether or not a model with forecasting abilities will be useful for trading, the performance of trading based on the trading signals needs to be evaluated. This performance does not only depend on forecast accuracy but also on volatility and transaction costs. In the next lesson we will see how to evaluate performance with the Trading Simulator.

Lesson 6: Trading Simulator

The Trading Simulator simulates trading based on the trading signals and the user’s trading preferences. It can be used to calculate returns and other performance indicators.

![]() Click on the “Forecasts” tab and

set the “Chart period” to “100 Years”

Click on the “Forecasts” tab and

set the “Chart period” to “100 Years”

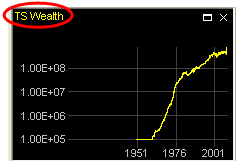

The “TS Wealth” chart (middle-left) shows the Trading Simulator Wealth[14]. To get its exact current value:

![]() Click on the data series name (“TS

Wealth”) above the chart

Click on the data series name (“TS

Wealth”) above the chart

A data overlay will be shown on the chart with the value at the current bar.

Tip: You can get a data overlay for any data series in a chart by clicking on its name above the chart. Click once more to hide it again. To learn more see data overlay.

At model creation, Trading Simulator Wealth starts with the Start Capital as specified on the “Trading System” tab of the model configuration (100,000). While the Trading Simulator is enabled, it simulates trading according to the signals. Its wealth is adjusted accordingly, taking into account the broker commission, spread and slippage settings as specified on the “Trading System” tab. Recall from lesson 1 that the Trading Simulator was set to start trading automatically after 2500 bars (around 1960).

Tip: You can manually enable or disable the Trading Simulator during

model evolution with the “TS” ![]() button on the main

toolbar. You can also change Trading System settings and other model parameters

during model evolution using the “Model Configuration”

button on the main

toolbar. You can also change Trading System settings and other model parameters

during model evolution using the “Model Configuration” ![]() button on the

main toolbar.

button on the

main toolbar.

The middle-center chart shows a 1 year trailing return of the security (in yellow) together with a 1 year trailing return of the Trading Simulator (in red). The middle-right chart shows the excess 1 year trailing return of the Trading Simulator over the security[15].

The bottom-left chart shows the historical volatility of the security (in yellow) with the historical volatility of Trading Simulator wealth (in red). The volatility of Trading Simulator wealth is often somewhat lower than that of the security because the alternation of long, short and cash positions tends to temper volatility.

The bottom-center and bottom-right charts show the Trading Simulator position and its number of transactions per day[16]. On a period of 100 years these charts are not very clear. We will therefore add moving averages:

![]() Right-click on the “TS Position”

chart

Right-click on the “TS Position”

chart

![]() In the context menu, open the

submenu for the data series and click “Add moving average…”

In the context menu, open the

submenu for the data series and click “Add moving average…”

![]() In the “Edit Data Series

Parameters” dialog box enter “1000” at “Bars” and click “OK”

In the “Edit Data Series

Parameters” dialog box enter “1000” at “Bars” and click “OK”

A 1000 day moving average of the Trading Simulator position has now been added to the chart (in red).

![]() In the same

way, add a 1000 day moving average to the “TS Transactions” chart

In the same

way, add a 1000 day moving average to the “TS Transactions” chart

Tip: You can add moving averages to most charts.

An overview of the Trading Simulator’s performance with various risk and return indicators is provided by the Performance Overview:

![]() Click on the “Performance” tab

(below the Model window)

Click on the “Performance” tab

(below the Model window)

The

Performance Overview is now showing. You can change the calculation period and

some other settings by clicking on the “Performance Calculation Settings” ![]() button at the top of the

Performance Overview. To learn more, see Performance

Overview.

button at the top of the

Performance Overview. To learn more, see Performance

Overview.

Note: Model evolution, forecast accuracy and performance depend in part on random factors that are inherent to agent-based modeling and genetic programming. The model created in this tutorial will therefore be different every time it is created. In general it is recommended to do multiple runs using the same model configuration for a more complete overview of possible results.

Tip: For a more extensive analysis of the potential range of returns under varying conditions based on assumed values of FDA and volatility, the Statistical Simulations can be used.

Lesson 7: Creating your own models

This tutorial concludes with some guidelines for creating your own models. When creating your own models, a few things will usually be different than as shown in this tutorial.

Market data

Market data needs to be provided and may need to be converted to one of Adaptive Modeler’s supported formats. See 4. Market data for more information about this.

Model Configuration

At least the following model parameters should be checked/adjusted to correspond with the security that you are modeling and with your preferences (more about model configuration is explained in 5. Model configuration):

On the “General” tab:

- After selecting a quote file, the “Model evolution start date/time” will automatically be set at the earliest possible start time (a certain number of bars after the first quote in the quote file). It is possible to set the start date/time to a later date/time.

- Verify that the “Market Trading Hours” match the actual trading hours of the security and correct them if necessary.

On the “Model” tab:

- Under Rounding, make sure that the number of decimal digits and minimum price increment unit are correct.

In the “Gene Selection”:

- Enable/disable the open, high, low, bid, ask genes depending on which data is included in the quote file and whether or not agents should be able to see it. (Note that bid and ask also apply to the Virtual Market).

- Enable/disable volume related genes depending on whether or not volume data is included in the quote file and whether or not agents should be able to see it.

- Enable/disable genes related to custom input variables depending on whether or not these are included in the quote file and whether or not agents should be able to see them.

On the “Trading System” tab:

- Check or uncheck “Allow short positions” and other trading signal generation settings as desired.

- Enter a Start Capital for the Trading Simulator.

- Enter the correct broker commissions, spread and slippage values for the security.

Model evolution

By default, the “Pause model at start” option is disabled so model evolution starts immediately after initialization. Often you may want to evolve a model first until present day without interruptions and then analyze the results afterwards (also see Computation performance issues). However, if you want to observe model evolution “live” using the Population window (or other data that is not stored historically) you can use the pause, step and resume functions.

Style

The default style that Adaptive Modeler uses is not the same as the style that was used for this tutorial and does not contain the same charts. However, you can select any style to be the default style or even create your own default style. To learn more, see Styles.

3. How does Adaptive Modeler work?

Adaptive Modeler consists of two main parts: the Agent-based Model and the Trading System. In short, the Agent-based Model receives quotes and produces price forecasts and the Trading System decides when a new trading signal should be given based on the forecasts and the user’s trading preferences.

3.1 Agent-based Model

The Agent-based Model consists primarily of a population of agents and a Virtual Market where agents can trade the security. An agent is an autonomous entity representing a trader (or investor) with its own assets (cash and/or shares) and its own trading strategy.

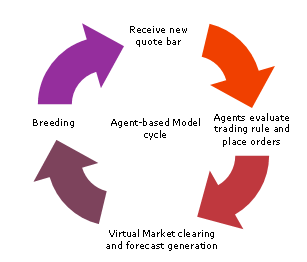

After initialization, a new model starts evolving by executing its regular cycle for every received quote bar as shown below.

After a new quote bar has been received, agents can place a new order or remain inactive according to their trading strategy. After all agents have evaluated their trading strategy, the Virtual Market determines the clearing price, executes all executable orders and releases the price forecast for the next bar. Finally, breeding of new agents and replacement (by evolutionary operations such as crossover and mutation) can take place. This process then repeats itself for the next bar.

Note that in most cases (depending on model settings) the agent-based model is not a closed economy. The total amount of money in the model may vary because of agent replacements[17] and because of broker commissions charged to agents. New agents get an initial wealth (according to the Agent Initialization settings) that is usually different (higher) than the wealth of the replaced agents. On average this has an increasing effect on the total amount of money. When broker commissions are charged to agents these amounts are being discarded, which has a decreasing effect on the total amount of money. The total amount of money in the model can be observed with the Population Cash data series. Also the total number of shares that exist in the model can vary because of agent replacements. New agents get an initial shares position according to the Agent Initialization settings while the shares of the replaced agent are being discarded. The total number of shares in the model can be observed with the Population Shares data series.

3.1.1 Agent Population

At model initialization a population of agents is created according to the model parameters specified by the user. These parameters include the Population Size and the Agent initialization settings. The population size is generally thousands of agents. Upon creation, each agent receives a starting capital consisting of cash and/or shares depending on the distribution methods selected in the Agent initialization settings. Each agent also receives a trading rule (its “genome”) that is randomly created according to the Genome Settings.

After all initialization processes, the agent population will evaluate its trading rules, trade and breed according to the Agent-based Model cycle.

3.1.1.1 Trading rules

The trading rules use historical market data as input. This can be price or volume data of the security (either on the Real or Virtual Market) or custom input variables. According to their internal logic they return an “advice” consisting of a desired position (as a percentage of wealth) and an order limit price for buying or selling the security. A market order can also be indicated. The internal logic of the trading rules is built from several functions such as:

- market data access functions

- average, min, max functions on historical market data

- logical and comparison operators

- some basic Technical Indicators

The trading rules are created by genetic programming technology. Through evolution, the trading rules are driven to use those input data and functions that provide the most predictive value. For more information about how the trading rules are constructed, see III.2 Genetic programming in Adaptive Modeler.

3.1.1.2 Order generation

Adaptive Modeler translates the output of an agent’s trading rule (the “advice”) into a buy or sell order by comparing the desired position with the agent’s current position and calculating the number of shares that need to be bought or sold. If shares need to be bought or sold, an order will be generated to buy/sell the required number of shares, using the limit price or market order indication included in the advice.

Example:

An agent owns 1000 shares of the security and 80,000 in cash. The security’s price is 38,50. The agent’s wealth is therefore 118,500 and its position is 32.5%. If its trading rule returns an advice for a position of 50% and a limit price of 38,50, then a limit order will be generated to buy 539 (= 50% * 118,500 / 38,50 - 1000) additional shares for a limit price of 38,50.

Some implementation details:

- The example given above is simplified. Actually, broker commission costs are included in the calculation as well.

- Before translating an advice into an order, the desired position is limited to 100% of wealth for long position and -100% of wealth for short positions so that no positions are being entered that exceed these limits. Also an initial margin check is performed to verify that the agent’s new position (after the intended order would be executed) will still be within [-100%, 100%] of the agent’s new wealth, taking into account the difference between the order limit price and the last (Real Market) security price. If not, the number of shares to buy or sell will be reduced accordingly. (Note that an existing short position of an agent may later become less than -100% of wealth through an increase in the security price; also see Margin maintenance below).

- If the difference between the desired position and the current position is less than half the Minimum position increment, no order is generated. This ensures that no order is being generated when the desired position is already equal to or close enough to the current position.

- If an order does not get executed on the Virtual Market, the agent that placed the order will cancel the order before evaluating its trading rule again. If the new advice from the trading rule still requires a buy or sell order, an order will be placed again. This ensures that each agent can have only one order open at a time.

- Depending on the Fractional shares setting, agents can trade fractional shares or only whole numbers of shares.

- A long position can be changed into a short position and vice versa through a single transaction. In this case the fixed broker commission will be charged twice.

3.1.1.3 Margin maintenance

If the current position of an agent becomes less than -150% of its wealth (as a consequence of an increase in the security price), the agent will receive a “margin call”. This means that a buy order will be generated with a limit price set to 5% above the most recent price on the Virtual Market. (The desired position will be based on the current advice as normal since this is always at least -100%). Note that this mechanism does not guarantee that the short position will become higher than -150%. The number of margin calls occurring can be monitored with the Margin Calls data series.

3.1.1.4 Default management

If an agent's wealth becomes negative, it is forced to close any open position (regardless of available cash) by placing an order with a limit price 5% higher/lower than the most recent price on the Virtual Market. Once their position is closed, defaulted agents will be replaced in a special “defaults replacement” procedure by random created agents (if they weren't already replaced by the breeding operation). Note that defaulted agents may continue to exist as long as their position can not be fully closed and they are not being replaced by the breeding operation. It is theoretically possible that a defaulted agent (that has not yet been removed) recovers and gets positive wealth again. In that case it continues to exist as normal. The number of defaults occurring can be monitored with the Defaults data series.

3.1.2 Virtual Market

The Virtual Market is a simulated double auction batch market where all buy and sell orders from agents are collected. Every bar, after all agents have evaluated their trading rule and placed their order (if any), the Virtual Market calculates the clearing price. The clearing price is the price at which the highest trading volume from limit orders can be matched[18]. The Market Depth window shows the depth of the orderbook before and after clearing. There is no market maker. When the total number of shares offered (at or below clearing price) exceeds the total number of shares asked (at or above clearing price) or vice versa, the remaining orders will not be (fully) executed. In this case, orders at the clearing price will be selected for execution with priority for market orders over limit orders and then on a first‑in‑first‑out (FIFO) basis. Orders can be partially executed. In case there are no matching limit orders at all, no market orders will be executed either.

The forecast for the real market price can be based either on the clearing price of the Virtual Market (Virtual Market Price) or on only the buy and sell orders of a dynamically changing group of the best performing agents (Best Agents Price). In the default configuration the Virtual Market Price is used. The rationale for using the Virtual Market Price as an indicator for future prices is as follows: Because of the volume weighted clearing price computation mechanism, wealthier (more successful) agents (who will generally place bigger orders) will have a bigger influence on the market price than less wealthy (less successful) agents. This way the forecast calculation mechanism has a preference for successful trading strategies but still includes a high number of diverse trading strategies. The latter is needed to make the forecasting mechanism more robust to changes in market behavior since previously successful trading strategies are not guaranteed to remain successful in the future.

In some cases, using the Best Agents Price as the forecast may result in better forecasts. Other than that, it may be interesting to compare the forecasting abilities of the entire Virtual Market with those of a (much smaller) group of only the best performing agents.

3.1.3 Breeding

Breeding is the process of creating new agents to replace poor performing agents. Breeding occurs by selecting pairs of well performing agents (parents) and producing new genomes by recombination of the parent genomes through a crossover operation. In a crossover operation, the parent genomes are copied and then a randomly chosen part of the copied genome of one parent gets exchanged for a randomly chosen part of the copied genome of the other parent. The resulting two new genomes are used to create two new agents. Breeding can occur every bar or every n bars where n is the breeding cycle length.

3.1.3.1 Selection of parents

Parents are selected according to the parameters Minimum breeding age, Eligible selection, Parents group and Parent selection method. First the eligible selection is made. This is a temporary sub population in which breeding and replacement will take place. The eligible selection consists of agents of minimum breeding age or older, either all of them or a random selection of specified size. Then the best performing agents (judged by Breeding Fitness Return) of the eligible selection are selected as parents using the specified Parent selection method. The number of parents selected is specified by the Parents group size parameter. For more information about the selection process, see the parameter descriptions.

3.1.3.2 Creating offspring

The selected parents are randomly grouped in pairs. For each pair the crossover operator picks a random node in a copy of one parent’s genome and then selects another random node (of the same type) in a copy of the other parent’s genome. The subtrees starting at the selected nodes are then swapped.

To be accepted, the resulting offspring genomes must meet the Maximum Genome Size and Maximum Genome Depth constraints and must be different than their parent genomes. The crossover operator will attempt to exchange subtrees that are big enough to meet the specified Preferred minimum number of nodes. If Create unique genomes is checked, the offspring genomes must also be unique within the current group of parents and offspring. If not accepted, the crossover is retried until acceptable offspring genomes have been created or a time limit is exceeded. (In some cases crossover may not be possible, resulting in less offspring agents being created).

The resulting offspring genomes may then be mutated, according to the probability given in the Mutation probability parameter. Finally, two new agents are created and provided with the offspring genomes and a starting capital consisting of cash and/or shares according to the distribution methods selected in the Agent initialization settings.

3.1.3.3 Replacing agents

The created offspring agents will replace the worst performing agents of the eligible selection (judged by Replacement Fitness Return). Note that the number of agents that are being replaced is always equal to the number of offspring agents that have been created. This is to keep the population size stable.

3.1.4 Model Evolution

In the early stage of model evolution the forecast may sometimes diverge strongly from the security’s price. In this case the Virtual Market is acting chaotic because of an imbalance in supply and demand. This imbalance is caused by the fact that at model start the initial position of agents may not be in line with their trading rules. Therefore the agents first need to align their portfolio with the advice from their trading rule which can cause heavy trading volumes and big price changes.

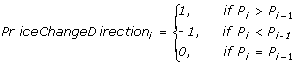

As the model evolves two things will happen: First, agents that happen to have a well performing trading rule will become wealthier than less fortunate agents. Because they are wealthier they will have more influence on the Virtual Market price (the forecast) which presumably is beneficial to the accuracy of the forecast. Second, the trading rules will improve by natural selection as breeding creates new trading rules from the best performing rules, replacing the worst performing rules. For the purpose of selecting agents for breeding, the agent fitness is determined by the Breeding fitness return data series. For the purpose of selecting agents to be replaced, the agent fitness is determined by the Replacement fitness return data series.